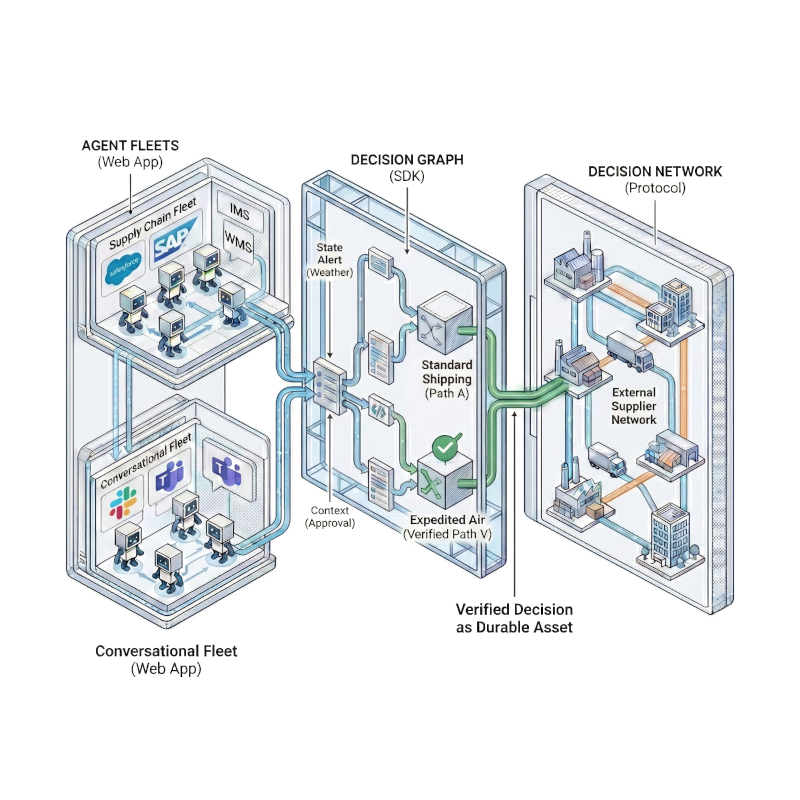

Framework-agnostic infrastructure for agents that execute across systems and organizations. Durable state, self-issued identity, and encrypted routing.

You own the compute. Summoner handles the coordination. Unlike hosted platforms that trap your agents, Summoner is a protocol that connects them.

- Your agents run locally, connecting to existing systems (SAP, WMS, Slack).
- Coordination produces durable, signed state, not fire-and-forget messages.
- Fleets discover and transact with external agents across organizational boundaries.

MCP connects agents to tools. A2A connects agents to agents. SPLT coordinates agents across trust boundaries — with durable state, portable identity, and encrypted routing.

Define behavior. Summoner handles the network.

Framework-agnostic. Async-native. Turn any Python script into a network-coordinated agent. Compatible with LangChain, CrewAI, AutoGen, or raw LLM calls.

We handle the plumbing; you handle the brains.

Built on asyncio to handle thousands of concurrent negotiations without blocking.

Fully typed payloads, compatible with any native Python structure.

```python
# Excerpt: assumes `agent`, `Move`, `Stay`, `Trigger`, `Action`, and `order`
# are already defined elsewhere in the script.

@agent.receive("ready --> action")
async def handle_action(ctx):
    ...
    if ctx["status"] == "done":
        return Move(Trigger.done)  # send an event
    # returning None results in no event


@agent.receive("action --> finish")
async def handle_finish(ctx):
    ...
    if ctx["status"] == "in_progress":
        return Stay(Trigger.in_progress)  # send an event
    else:
        return Move(Trigger.OK)  # send an event


@agent.send(
    "ready --> action",
    on_actions={Action.MOVE},
)
async def send_msg():
    # receive and send python native objects
    return {
        "qty": order.quantity,
        "meta_data": {"ts": "01/01/2026"},
    }
```

```json
{
  "host": "127.0.0.1",
  "port": 8888,
  "version": "rust",
  "logger": {
    "log_level": "INFO",
    "enable_console_log": true,
    "console_log_format": "\u001b[92m%(asctime)s\u001b[0m - \u001b[94m%(name)s\u001b[0m - %(levelname)s - %(message)s",
    "enable_file_log": true,
    "enable_json_log": true,
    "log_file_path": "logs/",
    "log_format": "%(asctime)s - %(name)s - %(levelname)s - %(message)s",
    "max_file_size": 1000000,
    "backup_count": 3,
    "date_format": "%Y-%m-%d %H:%M:%S.%3f",
    "log_keys": ["from", "to"]
  },
  "hyper_parameters": {
    "connection_buffer_size": 256,
    "command_buffer_size": 64,
    "control_channel_capacity": 8,
    "queue_monitor_capacity": 100,
    "client_timeout_secs": null,
    "rate_limit_msgs_per_minute": 1000,
    "timeout_check_interval_secs": 60,
    "accept_error_backoff_ms": 100,
    "quarantine_cooldown_secs": 600,
    "quarantine_cleanup_interval_secs": 60,
    "throttle_delay_ms": 200,
    "flow_control_delay_ms": 1000,
    "worker_threads": 4,
    "backpressure_policy": {
      "enable_throttle": true,
      "throttle_threshold": 50,
      "enable_flow_control": true,
      "flow_control_threshold": 150,
      "enable_disconnect": true,
      "disconnect_threshold": 300
    }
  }
}
```

The server is an untrusted relay. It accepts connections, maintains the pipe, and broadcasts messages without seeing inside them. Validation lives at the edge (in your agents), while the server handles the physics of the network. Switch instantly between a Python core for rapid development and a Rust core for high-concurrency production environments.
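Because the relay does not inspect payloads, each agent has to check what it receives before acting on it. The following is a minimal sketch of edge validation in plain Python, assuming a payload shaped like the `qty` / `meta_data` example above. The `validate_order` helper and its rules are hypothetical and are not part of the Summoner API.

```python
from typing import Any


def validate_order(msg: Any) -> dict:
    """Return a cleaned payload or raise ValueError (hypothetical helper)."""
    if not isinstance(msg, dict):
        raise ValueError("payload must be a dict")
    qty = msg.get("qty")
    # bool is a subclass of int, so reject it explicitly
    if isinstance(qty, bool) or not isinstance(qty, int) or qty <= 0:
        raise ValueError("qty must be a positive integer")
    meta = msg.get("meta_data", {})
    if not isinstance(meta, dict):
        raise ValueError("meta_data must be a dict")
    # Return only the fields that were checked; unknown keys are dropped
    return {"qty": qty, "meta_data": meta}


print(validate_order({"qty": 3, "meta_data": {"ts": "01/01/2026"}}))
```

A receive handler could call a function like this first and ignore or log any message that raises.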

Toggle between two interchangeable implementations with a single config flag. Use the default Python (asyncio) server for maximum compatibility on any platform, or drop in the Rust (Tokio) engine on Unix systems to unlock high throughput and advanced traffic shaping.
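As a sketch of that toggle, the config above sets `"version": "rust"`. The helper below changes only that key in an in-memory copy of a config. The `with_version` helper is hypothetical, and `"python"` as the value that selects the asyncio server is an assumption; check the server documentation for the accepted values.

```python
import copy
import json


def with_version(config: dict, version: str) -> dict:
    """Return a copy of `config` with only the `version` key changed."""
    updated = copy.deepcopy(config)
    updated["version"] = version
    return updated


base = {"host": "127.0.0.1", "port": 8888, "version": "rust"}
dev = with_version(base, "python")  # "python" is an assumed value
print(json.dumps(dev, indent=2))
```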

Gain insight without compromising secrecy. The logger’s log_keys parameter acts as a semantic filter, allowing you to record essential routing metadata (like route or id) while automatically pruning sensitive payload bodies from your infrastructure logs.
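To illustrate the idea of an allow-list filter, here is a minimal sketch that keeps only the configured keys of a message before it is logged. It shows the concept only: `prune_for_log` is a hypothetical name, the message shape is invented for the example, and the server's logger may apply `log_keys` differently.

```python
from typing import Any


def prune_for_log(message: dict[str, Any], log_keys: list[str]) -> dict[str, Any]:
    """Keep only the allow-listed top-level keys; everything else is dropped."""
    return {key: message[key] for key in log_keys if key in message}


message = {"from": "agent-a", "to": "agent-b", "payload": {"qty": 3}}
print(prune_for_log(message, ["from", "to"]))
# -> {'from': 'agent-a', 'to': 'agent-b'}
```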

Map the runtime to your metal. The Rust server’s worker_threads parameter gives you direct control over the Tokio task scheduler, allowing you to tune concurrency to match your specific CPU quotas or container limits.
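One way to choose a value for `worker_threads` is to cap it at the number of cores the process can see and at any CPU quota you know about. The sketch below assumes you supply the quota yourself (for example, a container limit of 2.5 CPUs); `suggest_worker_threads` is a hypothetical helper, not something Summoner provides.

```python
import math
import os
from typing import Optional


def suggest_worker_threads(cpu_quota: Optional[float] = None) -> int:
    """Pick a thread count no larger than the visible cores or the CPU quota."""
    cores = os.cpu_count() or 1
    if cpu_quota is None:
        return cores
    # Round the quota down, but never go below one thread
    return max(1, min(cores, math.floor(cpu_quota)))


print(suggest_worker_threads(cpu_quota=2.5))
```

The result can then be written into the `worker_threads` field of the config.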

Prevent "noisy neighbor" agents from destabilizing your mesh. The engine enforces a three-stage defense—Throttle, Flow Control, and Disconnect—to gracefully slow down hyper-active senders before they can starve peers of bandwidth.
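The three stages can be pictured as thresholds on a sender's backlog. The sketch below reuses the `backpressure_policy` values from the config above and assumes that each threshold is compared against a pending-message count with `>=`. That comparison rule and the `backpressure_stage` function are assumptions for illustration, not the engine's actual logic.

```python
def backpressure_stage(queue_depth: int, policy: dict) -> str:
    """Return the strictest enabled stage whose threshold the depth reaches."""
    stages = [
        ("disconnect", "enable_disconnect", "disconnect_threshold"),
        ("flow_control", "enable_flow_control", "flow_control_threshold"),
        ("throttle", "enable_throttle", "throttle_threshold"),
    ]
    # Check from strictest to mildest so the highest matching stage wins
    for name, flag, threshold in stages:
        if policy.get(flag) and queue_depth >= policy[threshold]:
            return name
    return "ok"


policy = {
    "enable_throttle": True,
    "throttle_threshold": 50,
    "enable_flow_control": True,
    "flow_control_threshold": 150,
    "enable_disconnect": True,
    "disconnect_threshold": 300,
}

for depth in (10, 50, 200, 300):
    print(depth, backpressure_stage(depth, policy))
```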

Your First Handshake in 2 Minutes.

Go from zero to a coordinated fleet without configuring a single server.

```shell
# start a local server:
python first_server.py

# then run the client script:
python first_client.py
```

You need a server to connect to: your local server (127.0.0.1), a teammate's machine, or a hosted server. Clients do not require special parameters to connect to either the Python or Rust server: the wire protocol is the same. The server's implementation is transparent to the client.

In Summoner, an agent is by definition a subclass of SummonerClient. You can define your own agent class by starting with a minimal subclass of SummonerClient and then adding behavior.

```python
from summoner.client import SummonerClient


class MyAgent(SummonerClient):
    pass


agent = MyAgent()
agent.run(host="127.0.0.1", port=8888)
```

Agents typically do two things: receive messages from the server and react to them, and send messages to the server (periodically or in reaction to input). Both are declared with decorators on the instance.

The simplest receiver behavior prints messages received from the server:

```python
# minimal receive
from typing import Any

from summoner.client import SummonerClient

agent = SummonerClient()


@agent.receive(route="")
async def recv_handler(msg: Any) -> None:
    print(f"received: {msg!r}")  # runs concurrently with senders


agent.run(host="127.0.0.1", port=8888)
```

Receive handlers run concurrently with senders, and the function receives whatever other peers send (string or JSON-like dict).

The simplest sender can emit a message every second:

```python
import asyncio

from summoner.client import SummonerClient

agent = SummonerClient()


@agent.send(route="")
async def heartbeat():
    print("Sending 'ping'")
    await asyncio.sleep(1.0)  # sets the pace; sender is polled repeatedly
    return "ping"


agent.run(host="127.0.0.1", port=8888)
```
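To see why the `sleep` inside the sender sets the pace, it can help to simulate a polling loop in plain asyncio. The sketch below is not Summoner's client loop: `poll_sender` and `deliver` are hypothetical stand-ins, and skipping `None` results is an assumption about sender behavior.

```python
import asyncio


async def poll_sender(sender, deliver, rounds: int) -> None:
    """Call `sender` repeatedly and hand each non-None result to `deliver`."""
    for _ in range(rounds):
        payload = await sender()  # the sender's own sleep sets the pace
        if payload is not None:   # assumption: None means "send nothing"
            deliver(payload)


async def heartbeat() -> str:
    await asyncio.sleep(0.01)  # shortened from 1.0 so the demo finishes quickly
    return "ping"


sent: list[str] = []
asyncio.run(poll_sender(heartbeat, sent.append, rounds=3))
print(sent)
```

Each round waits for the sender to return before polling again, so a longer sleep in the sender means fewer messages per second.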